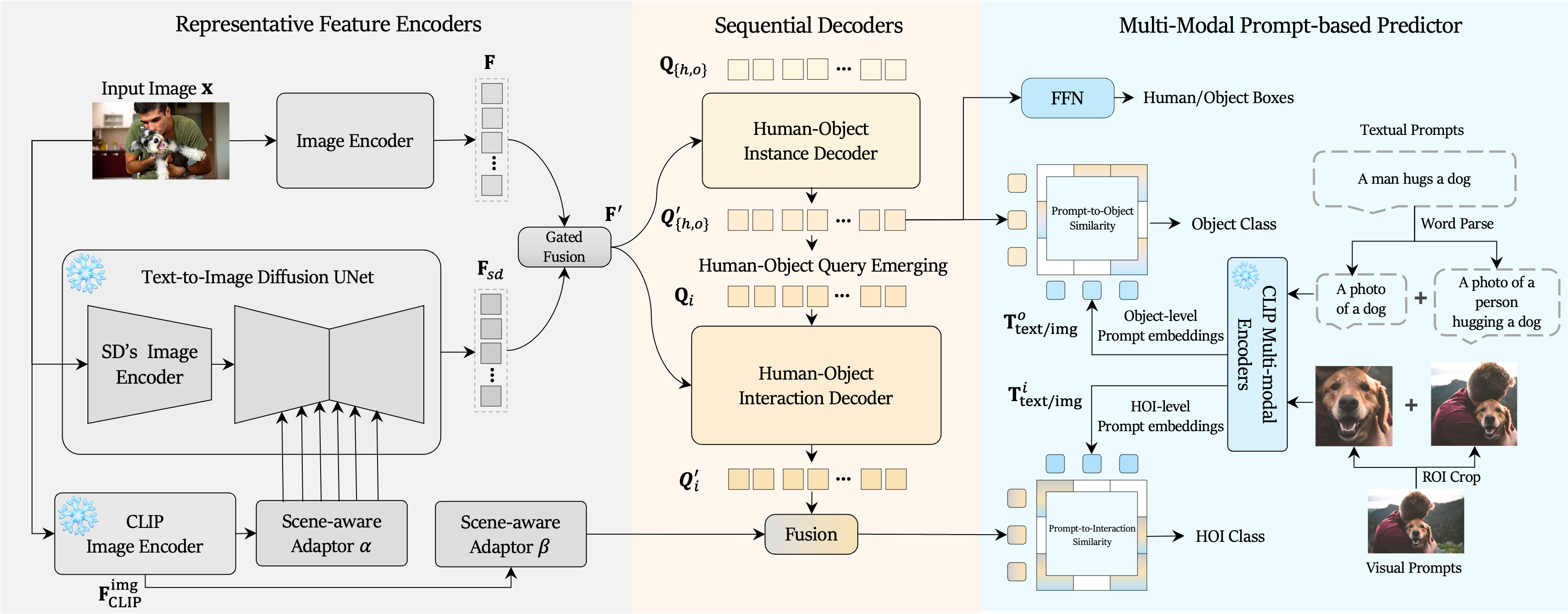

Overview of MP-HOI, comprising a pretrained human-object decoder, a novel interaction decoder, and CLIP-based object and interaction classifiers.

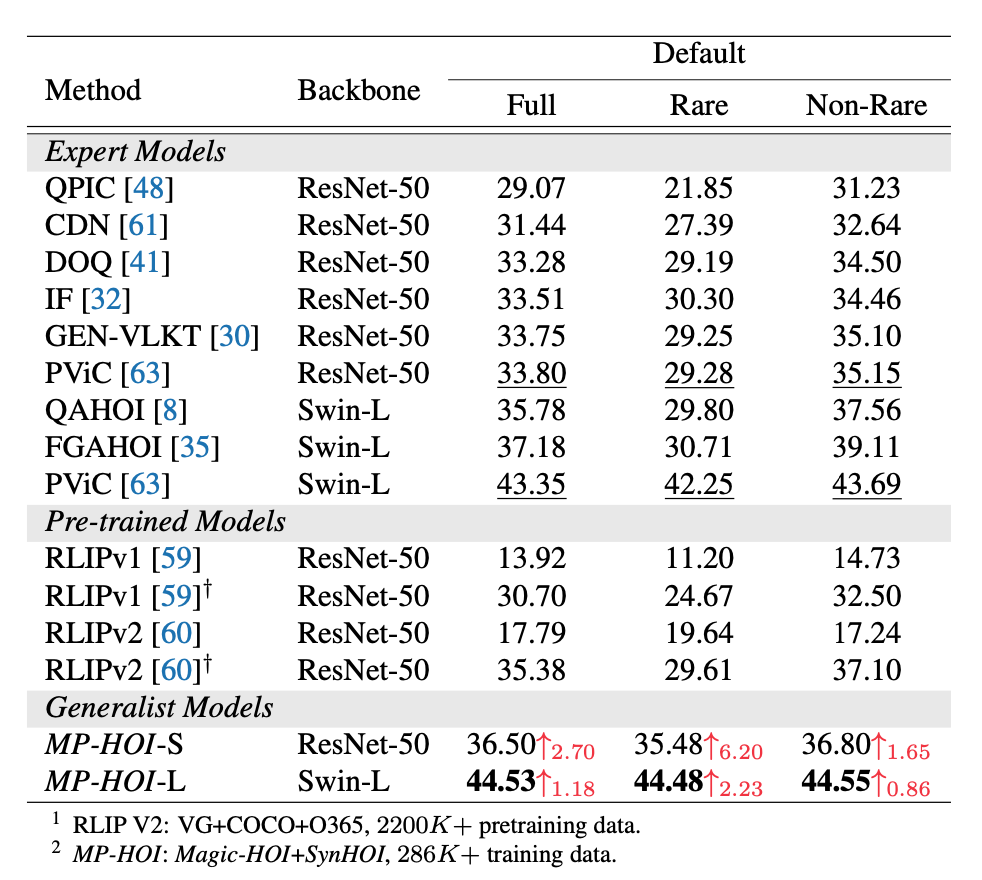

New SOTA

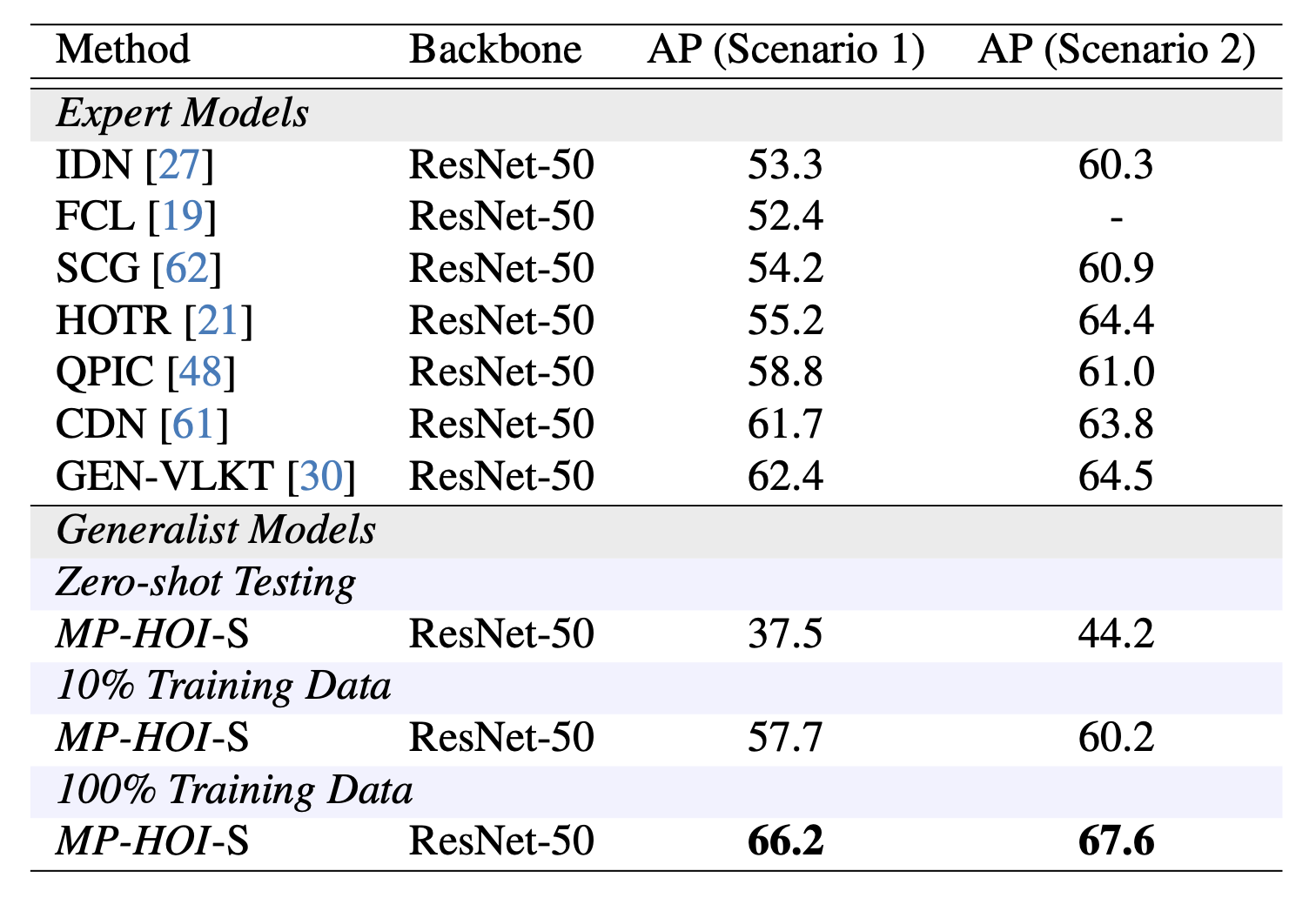

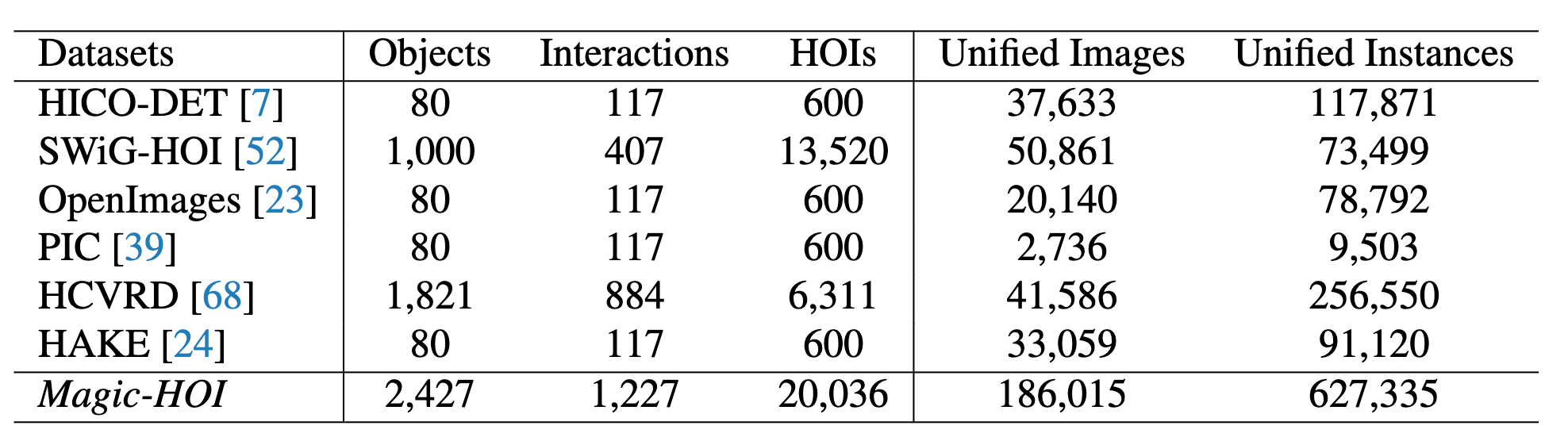

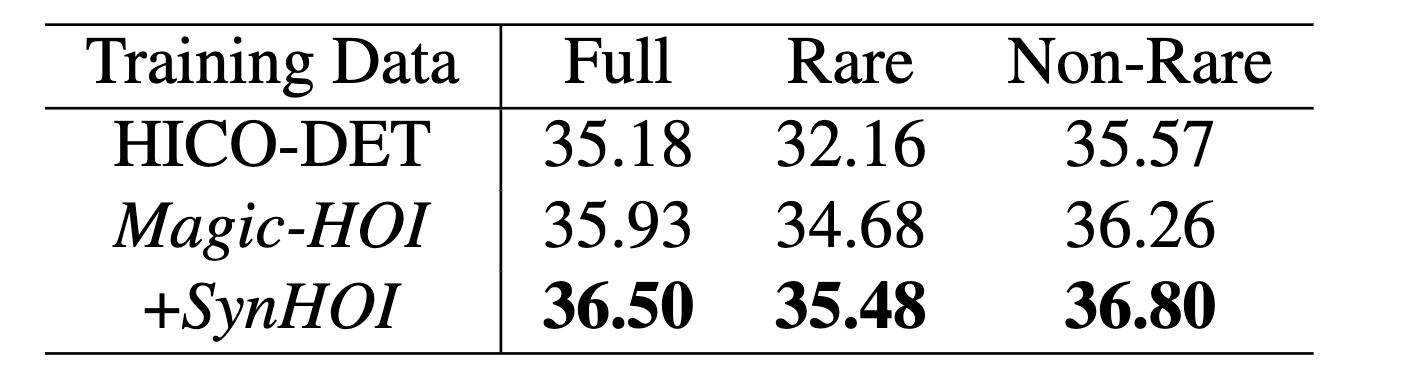

In this paper, we develop MP-HOI, a powerful Multi-modal Prompt-based HOI detector designed to leverage both textual descriptions for open-set generalization and visual exemplars for handling high ambiguity in descriptions, realizing HOI detection in the open world. Specifically, it integrates visual prompts into existing language-guided-only HOI detectors to handle situations where textual descriptions face difficulties in generalization and to address complex scenarios with high interaction ambiguity. To facilitate MP-HOI training, we build a large-scale HOI dataset named Magic-HOI, which gathers six existing datasets into a unified label space, forming over 186K images with 2.4K objects, 1.2K actions, and 20K HOI interactions. Furthermore, to tackle the long-tail issue within the Magic-HOI dataset, we introduce an automated pipeline for generating realistically annotated HOI images and present SynHOI, a high-quality synthetic HOI dataset containing 100K images. Leveraging these two datasets, MP-HOI optimizes the HOI task as a similarity learning process between multi-modal prompts and objects/interactions via a unified contrastive loss, to learn generalizable and transferable objects/interactions representations from large-scale data. MP-HOI could serve as a generalist HOI detector, surpassing the HOI vocabulary of existing expert models by more than 30 times. Concurrently, our results demonstrate that MP-HOI exhibits remarkable zero-shot capability in real-world scenarios and consistently achieves a new state-of-the-art performance across various benchmarks. Our project homepage is available at https://MP-HOI.github.io/.

Large-scale. High-quality data. SynHOI showcases high-quality HOI annotations. First, we employ CLIPScore to measure the similarity between the synthetic images and the corresponding HOI triplet prompts. The SynHOI dataset achieves a high CLIPScore of 0.849, indicating a faithful reflection of the HOI triplet information in the synthetic images. Second, Figure 2(b) provides evidence of the high quality of detection annotations in SynHOI, attributed to the effectiveness of the SOTA detector and the alignment of SynHOI with real-world data distributions. The visualization of SynHOI is presented in the video below.

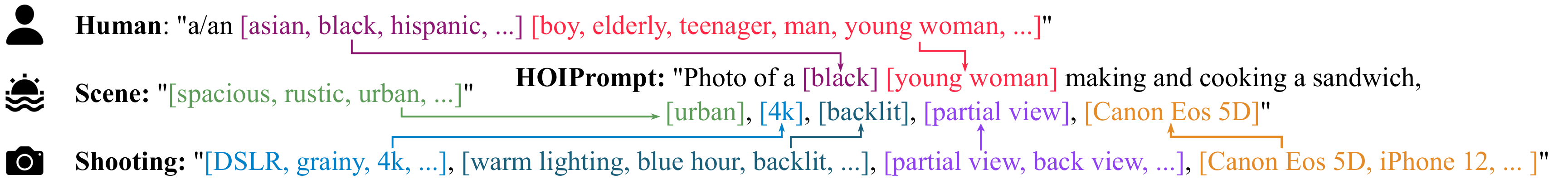

High-diversity. SynHOI exhibits high diversity, offering a wide range of visually distinct images.

Large-scale data with rich categories. SynHOI aligns Magic-HOI's category definitions to effectively address the long-tail issue in Magic-HOI. It consists of over 100K images, 130K person bounding boxes, 140K object bounding boxes, and 240K HOI triplet instances.

@article{yang2024open,

title={Open-World Human-Object Interaction Detection via Multi-modal Prompts},

author={Yang, Jie and Li, Bingliang and Zeng, Ailing and Zhang, Lei and Zhang, Ruimao},

journal={arXiv preprint arXiv:2406.07221},

year={2024}

}